AllTracker: Efficient Dense Point Tracking at High Resolution

Adam W. Harley, Yang You, Xinglong Sun, Yang Zheng, Nikhil Raghuraman, Yunqi Gu, Sheldon Liang, Wen-Hsuan Chu, Achal Dave, Pavel Tokmakov, Suya You, Rares Ambrus, Katerina Fragkiadaki, Leonidas J. Guibas

ICCV 2025

Overview

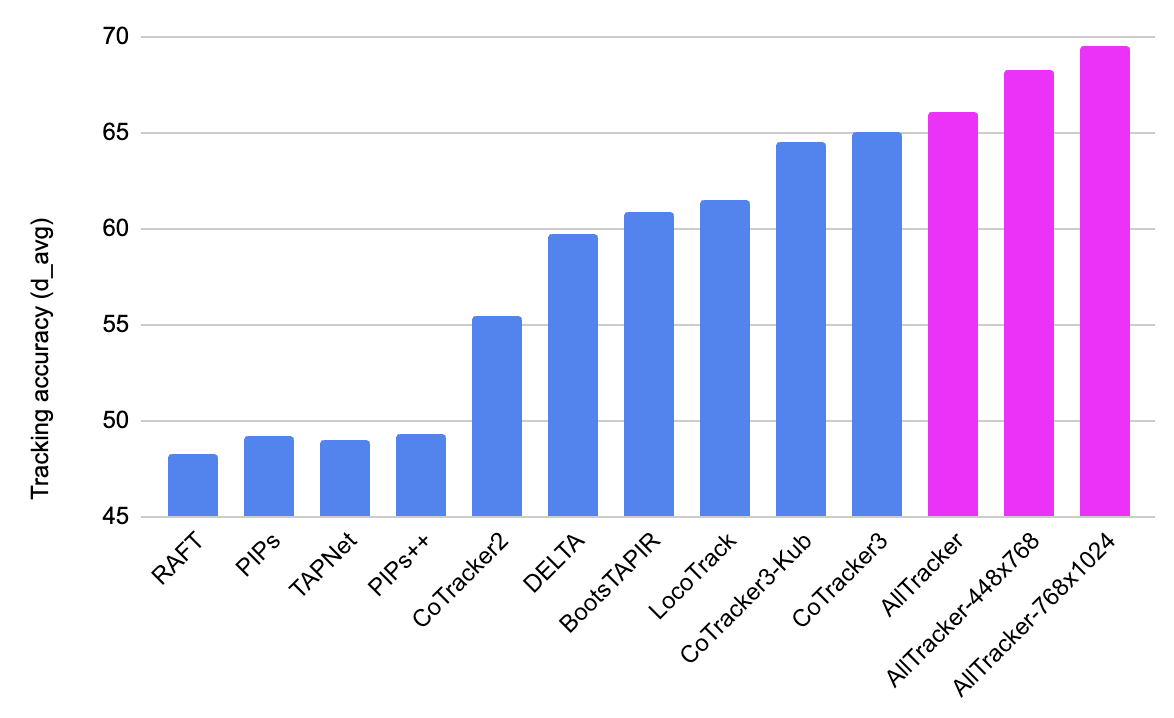

We introduce AllTracker: a model that estimates long-range point tracks by way of estimating the flow field between a query frame and every other frame of a video. Unlike existing point tracking methods, our approach delivers high-resolution and dense (all-pixel) correspondence fields, which can be visualized as flow maps. Unlike existing optical flow methods, our approach corresponds one frame to hundreds of subsequent frames, rather than just the next frame. We develop a new architecture for this task, blending techniques from existing work in optical flow and point tracking: the model performs iterative inference on low-resolution grids of correspondence estimates, propagating information spatially via 2D convolution layers, and propagating information temporally via pixel-aligned attention layers. The model is fast and parameter-efficient (16 million parameters), and delivers state-of-the-art point tracking accuracy at high resolution (i.e., tracking 768x1024 pixels, on a 40G GPU). A benefit of our design is that we can train on a wider set of datasets, and we find that doing so is crucial for top performance. We provide an extensive ablation study on our architecture details and training recipe, making it clear which details matter most. Our code and model weights are available.
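The link between flow fields and point tracks can be illustrated with a minimal sketch. It assumes each flow field stores, for every query-frame pixel, the displacement to its location in another frame; the function name `flows_to_tracks` and the nested-list layout are hypothetical choices for illustration, not the released code's API.

```python
def flows_to_tracks(flows, queries):
    """Convert query-to-frame flow fields into point tracks.

    flows[t][y][x] is the (dx, dy) displacement of query-frame pixel (x, y)
    when it is located in frame t (assumed layout, for illustration only).
    queries is a list of integer (x, y) pixel coordinates in the query frame.
    Returns tracks[t][i] = (x_t, y_t), the position of query i in frame t.
    """
    tracks = []
    for flow in flows:
        positions = []
        for (x, y) in queries:
            dx, dy = flow[y][x]
            # A track position is the query location plus its displacement.
            positions.append((x + dx, y + dy))
        tracks.append(positions)
    return tracks


# Toy example: a 2x3 image over 3 frames, moving 1 pixel right per frame.
H, W = 2, 3
flows = [[[(float(t), 0.0) for _ in range(W)] for _ in range(H)] for t in range(3)]

# Querying every pixel gives dense (all-pixel) tracks.
queries = [(x, y) for y in range(H) for x in range(W)]
tracks = flows_to_tracks(flows, queries)
```

Because every pixel of the query frame has a flow vector, a sparse tracker's output corresponds to passing only a few entries in `queries`.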

Native high-resolution dense tracking

Most point trackers operate at either 256x256 or 384x512, and run out of memory at higher resolutions. Our method can operate at 768x1024 on a 40G GPU. Our accuracy reliably scales with resolution.

Most point trackers also only track sparse points, while we track every pixel. We only subsample our output for the purpose of clear visualization.

Video panels: No subsampling; Subsampling rate 2; Subsampling rate 4; Subsampling rate 8.
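To give a sense of scale for the subsampling used in these visualizations, the sketch below counts how many tracks remain at each rate for a 768x1024 frame. It assumes the subsampling is a regular stride in both image dimensions and that 768 is the height; the helper `subsample_grid` is hypothetical.

```python
def subsample_grid(height, width, rate):
    """Return the (x, y) coordinates of every `rate`-th pixel in each dimension."""
    return [(x, y) for y in range(0, height, rate) for x in range(0, width, rate)]


# Number of visualized tracks at each subsampling rate (assumed regular stride).
counts = {rate: len(subsample_grid(768, 1024, rate)) for rate in (1, 2, 4, 8)}
for rate, n in counts.items():
    print(f"rate {rate}: {n} tracks")
```

Under these assumptions, rate 1 keeps all 786,432 pixels, and each doubling of the rate divides the count by four, down to 12,288 at rate 8.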

Long-range optical flow, visibility, and confidence, in sliding window

AllTracker works by estimating optical flow between a "query frame" and every other frame of a video, using a sliding-window strategy. We also output visibility and confidence. In relation to other point trackers, this flow formulation is our key novelty, and we make sparse methods (of similar speed and accuracy) redundant. In relation to other optical flow models, the key novelty of our approach is that we solve a window of flow problems simultaneously, instead of frame-by-frame; the information shared within and across windows unlocks the capability to resolve flows across wide time intervals.

Video panels: Input video; Optical flow; Visibility; Confidence.
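The sliding-window strategy can be sketched as a simple schedule of overlapping frame ranges. This is only an illustration: the window length of 16 and stride of 8 are placeholder values rather than settings taken from this page, and `sliding_windows` is a hypothetical helper.

```python
def sliding_windows(num_frames, window=16, stride=8):
    """Return (start, end) frame ranges, end exclusive, that cover a video.

    Consecutive windows overlap when stride < window, so estimates can be
    carried from one window to the next. The defaults are illustrative only.
    """
    if window <= 0 or stride <= 0 or stride > window:
        raise ValueError("require 0 < stride <= window")
    spans = []
    start = 0
    while num_frames > 0:
        end = min(start + window, num_frames)
        spans.append((start, end))
        if end == num_frames:
            break  # the last window reaches the final frame
        start += stride
    return spans


spans = sliding_windows(40, window=16, stride=8)
# Each span would be processed jointly against the same query frame.
```

With a stride smaller than the window, each window shares frames with the previous one, which is one way the information shared across windows could be realized.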